Veritas / Symantec Enterprise Vault Alternatives & Migration [MVP Guide]

IT budgets are tight. Now’s the time to look at your Veritas Enterprise Vault email archives and evaluate where you can reduce costs and how you can unlock value in the cloud.

Table of Contents

- The Cost of Maintaining an On-Premises Archiving Solution

- Beyond Enterprise Vault: Savings and Success in the Cloud

- Option 1: Second-Rate SaaS Archiving

- The Sad Truth of SaaS Security Stats

- Option 2: The Trusted Cloud that You Control

- Migrating Your Data Out of Enterprise Vault

- Considering Journals and Journal Migrations

- Your Migration Questions Answered

This is a great time for your organization to do some much-needed spring cleaning of your on-premises IT inventory, starting with your Veritas Enterprise Vault email archive. Whether you’ve already migrated to the cloud, have plans to migrate, or are still running Enterprise Vault in your on-premises data center or in the cloud, this MVP Guide provides you with the resources to help you make an informed decision.

If you’re an existing Veritas Enterprise Vault user or are planning to migrate your legacy Enterprise Vault archive to Veritas Enterprise Vault.cloud, it’s well worth taking a step back to consider alternatives. Whether your goal was to comply with a wide range of regulatory retention requirements, respond to eDiscovery in a legally defensible manner, meet business policies for email retention, or simply reduce the strain on your data center, Enterprise Vault was, back in the day, the most popular email archiving solution. But with ever-changing compliance laws and eDiscovery responsibilities, the Veritas Enterprise Vault archive you have relied on for many years is simply no longer up to the task.

Regardless of whether you’re using an on-premises or first-generation cloud-based version of Enterprise Vault, you will definitely have noticed that it has fallen behind in addressing today’s regulatory, legal, and business requirements. In addition, like many Veritas Enterprise Vault customers, you’re no doubt concerned about the cost and potential risk to your business of maintaining this increasingly archaic technology.

The Cost of Maintaining an On-Premises Archiving Solution

Whether you’ve already moved to the cloud but are still maintaining your on-premises Veritas Enterprise Vault email archive, or have moved and left your legacy archive in place for ongoing journaling, there’s a high cost associated with that decision. Simply put, by maintaining on-premises computing and storage infrastructure, you’re investing ever more capital in an aging platform that doesn’t meet your current requirements and won’t meet your future ones.

Paying the Price for On-Premises Archives Like Veritas Enterprise Vault

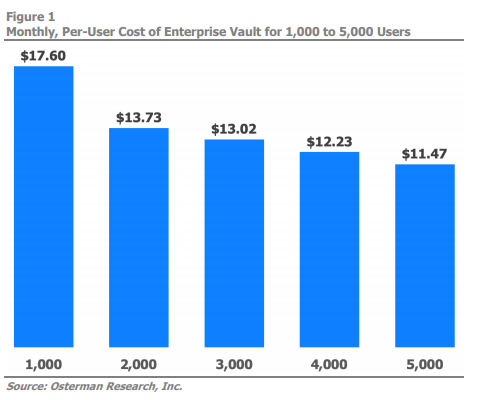

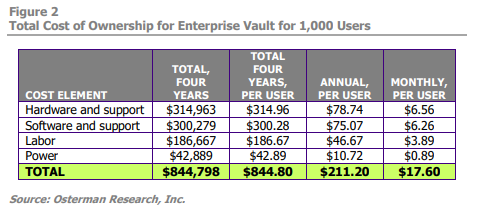

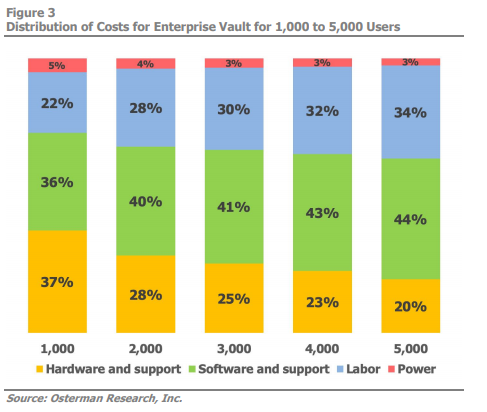

A recent Osterman Research white paper provided the following findings…

- Enterprise Vault is a capable on-premises platform for archiving various types of content, including email, SharePoint data, file shares, etc.

- Keeping your on-premises EV archiving solution active while you are moving the rest of your data center to the cloud does not fit with your digital transformation strategy.

- Enterprise Vault is relatively expensive compared to many alternatives, particularly those in the cloud.

Enterprise Vault itself will only cost more every year, as the diagrams above show. With software and hardware support, labor, and power all draining funds, the rising total cost of ownership further strengthens the case for a cloud-based archiving solution.

Beyond Enterprise Vault: Savings and Success in the Cloud

With cloud-based Platform as a Service (PaaS) solutions, organizations pay only for what they actually use rather than for estimated future requirements, which provides obvious savings. With Enterprise Vault, by contrast, your archives are stuck languishing on servers that you pay support costs to maintain and run. No doubt you would jump at the chance to pay only for what you use, which is what makes the cloud such an attractive prospect. Better still, organizations that adopt a “pay as you go” (operational expenses, or OpEx) model can draw on the resources and expertise of cloud hosting experts to take advantage of new capabilities faster, without upfront capital investment, and realize cost benefits throughout the year.

It seems like a no-brainer. You’re aware you should move away from aging solutions like Enterprise Vault and you know the cloud is the place to be. But what are your options?

Essentially, there are three possible strategies:

- Stick with what you have and continue to be limited by an on-premises solution like Enterprise Vault.

As you continue with your cloud transition strategy while maintaining your on-premises EV infrastructure, you’ll quickly realize the competitive disadvantage you are at.

- Move your Veritas Enterprise Vault email archives to a Software-as-a-Service (SaaS) archiving solution in the “kinda’ cloud”

Because they’re offered as the easiest possible cloud solution, SaaS solutions have become very popular over the years. They also became popular because, for a long time, they were the ONLY cloud solution available. But taking the easy option doesn’t give you the control or flexibility needed in today’s changing regulatory, legal, and business environments. Since they aren’t true hyperscale clouds like those of Amazon or Microsoft, SaaS archiving products are extremely restrictive and cannot be customized for real-world situations (otherwise known as “one-size-fits-all”). The limitations you’ll encounter include a lack of control over security, narrow eDiscovery options, and costly options to move your archive out if you’re dissatisfied with the SaaS provider. In essence, migrating your Enterprise Vault archives to a SaaS solution only puts you in a marginally better place than you were in with on-premises solutions.

- Embrace the true power of PaaS

To truly benefit from a move away from your on-premises Enterprise Vault archive, you need access to a next-generation cloud platform that gives you complete control. While SaaS vendors talk a lot about your data benefiting from being in their cloud, the truth is they aren’t (and can’t be) providing the cloud’s full value. Nor are they giving you much control over the security of your archived data. True cloud-based PaaS solutions, on the other hand, offer archiving in native file formats, complete control over security protocols and processes, on-demand scalability, the ability to designate specific geographic locations for data and access, and automatic updates that deliver the latest technology developments (with on-premises platforms, you must wait for the vendor’s update cycle to get new technologies).

With that in mind, we can safely say that the first strategy doesn’t make sense for most companies. So, let’s take a look at the two remaining possible strategies open to you: the halfway house versus the flexible, future-proof solution.

Option 1: Second-Rate SaaS Archiving

The Halfway House – Why Migrating From Enterprise Vault to Enterprise Vault.cloud Isn’t the Ideal Solution

SaaS archiving platforms have been around for a while now, with legacy vendors like Veritas (as well as others like Smarsh, Mimecast and GlobalRelay) making use of the SaaS model to deliver one-size-fits-all archiving solutions. In the early days of SaaS, cloud solutions such as these had some major selling points and they were the only cloud solutions available. They helped businesses to do away with ongoing on-premises infrastructure costs in favor of a convenient subscription service that required little input on their part. The problem is, they weren’t designed to be flexible or compatible with the true, hyperscale cloud requirements of today.

With “agility” and “flexibility” the key technology buzzwords of the last few years, this limitation quickly became obvious. As the world changes rapidly, especially in technology and data security, SaaS-based archives, unlike the hyperscale cloud, aren’t designed or equipped to cope with shifting regulations, constantly emerging security threats, or the need for businesses to scale up and down based on business requirements.


While SaaS solutions might once have been the easiest or only way to go, their static feature sets and one-size-fits-all design restrictions now limit security, access, accountability, and direct control over your data.

Most SaaS-based archiving vendors will tell you they offer the best turn-key cloud archiving platform but, in reality, they only introduce the same issues you experience with legacy on-premises infrastructure, such as the lack of an easy way to harness analytics, Machine Learning, and AI. In other words, the SaaS “lowest-common-denominator” design and architecture cannot meet today's needs.

Sharing Your Cloud with Others

Many SaaS issues stem from a basic drawback of the model: most SaaS-based archiving vendors don’t own their own datacenters. Instead, they rent space in what’s called a multi-tenant cloud. In reality, the SaaS vendor is subletting its cloud tenancy to you and offering a single application to hundreds of other companies.

Not only does this situation block the addition of much-needed new capabilities, but it also raises major security concerns. For instance, as a customer, you don’t control the encryption keys used to secure your data. This, coupled with a lack of control over the security processes and measures in place, means your company’s data could be inadvertently shared with all of the SaaS vendor’s other clients in the same multi-tenant cloud. That significantly increases the risk of unauthorized access, malware such as viruses and ransomware, data corruption, or deletion. It also means the SaaS vendor can access your company’s sensitive data at any time or provide access to government agencies via secret subpoenas, without you ever knowing.

Vendor Data Ransom

While security is a major issue for all organizations, subscription costs and total cost of ownership are always a close second. Most SaaS vendors will convert and store your company’s files in their own proprietary format that only their tools can access, essentially creating what amounts to a data prison. When you eventually want to move your data somewhere else, the vendor can charge you huge “reconversion fees” to move your data away from their service. On top of this data ransoming, the proprietary format also means you can’t use your data with AI or Machine Learning technology to provide content-based auto-classification and supervision for more accurate information management.

Before you consider a migration to Enterprise Vault.cloud, we suggest you check with the vendor:

- Do you have to pay a reconversion fee to extract your data out of your Enterprise Vault.cloud archive?

- What is the cost per GB for the extraction?

- Are there limitations on the amount of data I can search and extract per day?

Possible SaaS Archiving Issues:

- Limited or no control over your data’s geographic location – data sovereignty

- Little to no control over security capabilities

- Proprietary file formats that limit your ability to move your data without paying exorbitant fees - data ransoming

- Limited search and review options for audio, video and other non-email files

- Data analysis and eDiscovery functionality is limited to “lowest common denominator” vendor-provided tools

- No access to encryption keys used to encrypt your data

The Sad Truth of SaaS Security Stats

In a recent survey of global IT executives, including VPs, Directors, and members of the C-suite at major corporations, only 19% of those surveyed believed 75% or more of their SaaS vendors met all their security requirements. 70% stated they had been forced to make at least one security exception for a SaaS vendor. While many of these organizations are likely using popular SaaS products like Microsoft Office 365 and Salesforce, where the size and standing of the vendor might make the business more amenable to accepting a perceived lower risk, the clear takeaway is that many SaaS solutions don’t provide the security standards and flexibility modern organizations require.

On the topic of encryption keys, an astounding 95% of respondents believed it was important to control their own encryption keys, and 81% were uncomfortable with their SaaS vendors controlling them. However, 74% of those surveyed said they did not control the encryption keys for the majority of their SaaS solutions. This is a worrying statistic and one that many organizations will have to take steps to reverse as regulations continue to tighten and the threat of cybercrime grows. To that end, 92% of executives said they would require more security customization in the future, with 63% of them planning to retire current SaaS applications that don’t provide them control over encryption key creation and management.


These statistics paint a clear picture of the security landscape and the risk that the one-size-fits-all approach of SaaS vendors introduces. As the trend for security customization continues and scrutiny over data access and handling increases, SaaS solutions will become increasingly less palatable for organizations’ risk mitigation efforts. Instead, more secure and customizable solutions will be a major focus.

Stunted Security: SaaS Vendors Don’t Let You Control…

- Physical data center security

- Encryption keys

- Custom compliance reporting

- Cloud-native directories

- Firewall and firewall rules

- Application security analysis

- Identity management/Access controls

- Threat detection

Option 2: The Trusted Cloud that You Control

There is a way to retain complete control over your sensitive archived data while applying your own security standards and protocols. To achieve this, you must migrate your legacy archive data (whether it’s currently on-premises or in the cloud) to an archive hosted and managed in your own hyperscale cloud tenancy.

The best-known names in this space are, of course, Microsoft and Amazon. Coupling the power of their hyperscale clouds with the right PaaS-based information management and archiving solution will provide you with complete control over your data, with files stored in their native format, and best-in-class security and data management features included. Not only that, but you are also free to harness cloud-scale AI and Machine Learning to unlock crucial business insight from your data, plus automate data management and handling for accurate supervision, predictive surveillance, auto-classification, and eDiscovery response. This is the modern cloud in all its glory, offering a wide-ranging set of essential, future-proof features that SaaS archives simply can’t match.

Your own hyperscale cloud should give you…

- Immediate access to your data in its native format

- Full control of where data is stored for data sovereignty

- Complete, customizable control over security, compliance, and privacy

- Flexibility and on-demand scalability – use only what you need

- Reduced upfront investment and predictable ongoing costs – CapEx versus OpEx

- The ability to move your data out of the archive without paying extraction fees – (aka ransom fees)

- Faster, scalable eDiscovery searches and case management, as well as customizable retention/disposition (defensible disposition) policy controls for regulatory compliance

- Access to the latest AI and ML technology for auto-data classification, data mining, and analysis

- The ability to granularly search and review content within audio, video and social media files


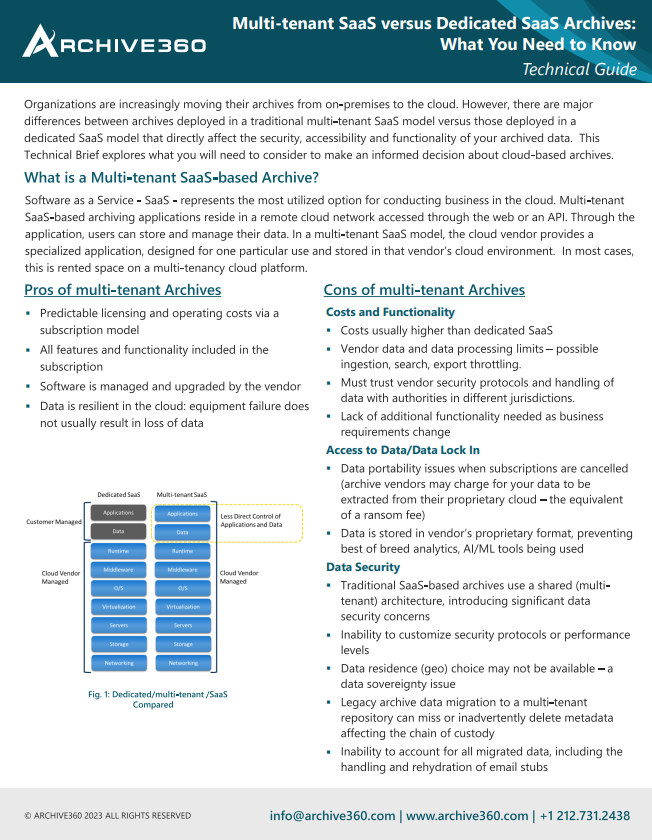

Multi-Tenant SaaS vs Dedicated SaaS: what you need to know

All cloud archives are not created equal. There are major differences between archives deployed in a dedicated SaaS model versus a multi-tenant model that affect the security, accessibility and functionality of your archived data. This Technical Guide explores what you will need to consider in order to make an informed decision.

Archive360 Open Archive vs SaaS Archives

Discover all the benefits of Archive360's Archive2Azure

| Features | SaaS Archives | Open Archive |
|---|---|---|
| Infrastructure | | |
| Host data in your corporate cloud | | |
| Security | | |
| Protect data with encryption keys | | |
| Compliance | | |
| Meet SEC 17a-4 regulations | | |
| Meet GDPR regulations | Limited | |
| Policy-driven records | Limited | |
| Compliant onboarding | | |
| Performance | | |
| Active user-based pricing | | |
| Management | | |
| Standard eDiscovery with case management | | |
| AI-powered eDiscovery | | |
| Manage any content/data type | Limited | |
| Records analysis, classification and management | Limited | |
| Policy-driven records classification and transformation | | |
| Data Loss Prevention and sensitive data analysis alerts | | |
| Export and produce data for third party consumption | | |
| Role-based access with Active Directory Integration | Limited | |
| Native import and export with O365, SharePoint Online and OneDrive | Limited | |
| Onboarding | | |
| Accelerated onboarding at 50 TB per day | | |
| Restore legacy archives back to native format | | |
| Pricing | | |
| Active user-based pricing | | |
| Interactive Users - free of charge (restrictions apply) | | |

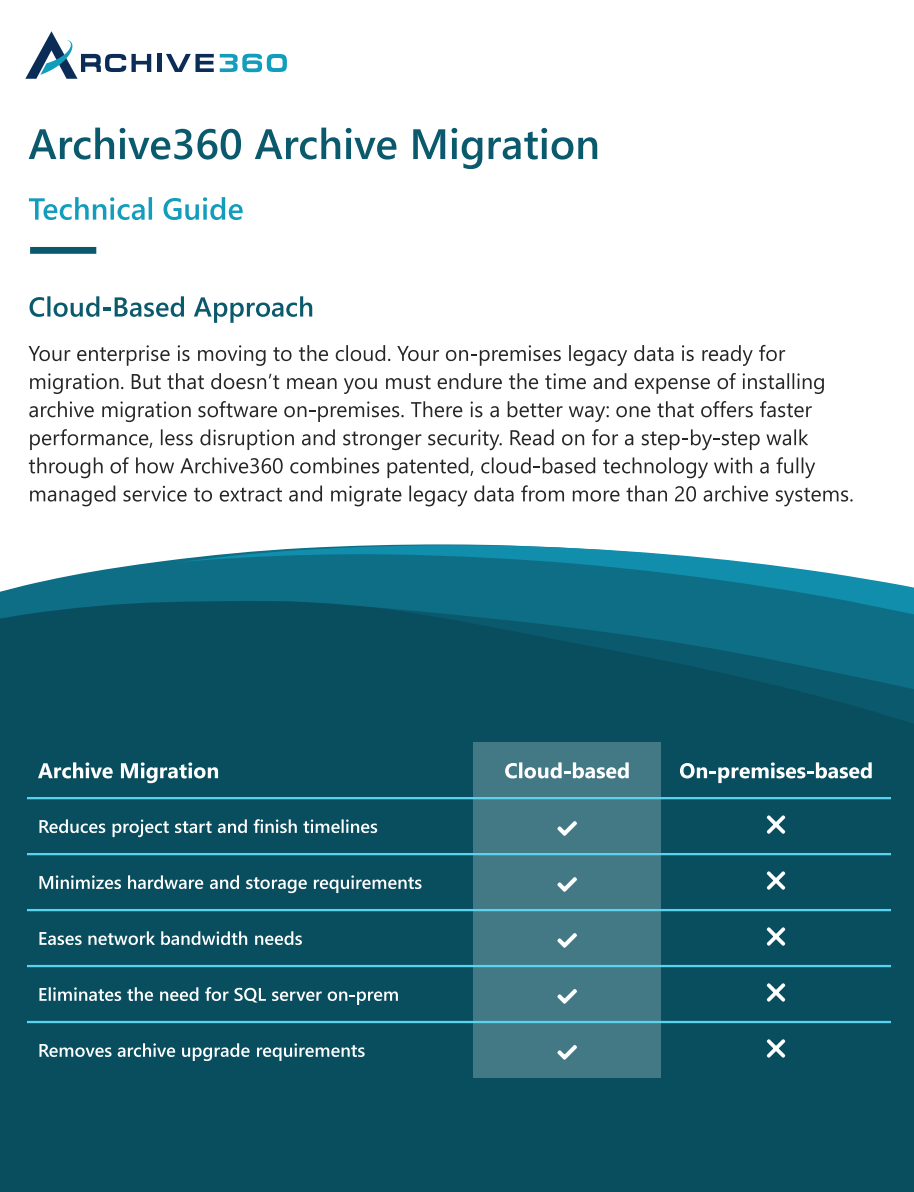

Technical Guide

Archive360 Archive Migration

A guide to Archive360's cloud-based approach to extract and migrate legacy data

Migrating Your Data Out of Enterprise Vault

If you’ve made up your mind to move to a new, more capable cloud platform, one of your first considerations will be what to do with the terabytes or even petabytes of data stored in your legacy EV archive. The answer is to find a partner that can help you migrate that data to the new cloud quickly, seamlessly, painlessly, and in a legally defensible manner, without the risk of leaving any of it behind, losing it en route, or interrupting your business’s day-to-day operations.

There are many companies offering such a service, including QuadroTech and Transvault, but the key is to find the high-performance, secure email migration tool that best fits your needs: one that not only moves your data to the cloud at the highest possible speed, but also ensures the migration is complete, accurate, and legally defensible, which includes protecting the chain of custody.

As you make a decision on your migration partner, you should also think about whether you want to archive or journal in your new cloud. Whether you wish to move your existing archived data to Office 365, another email archive, or to an archive hosted in your company’s own hyper-scale cloud tenancy, Archive360 can help.

Archive Migration

Our combination of experience and cloud-based automation is going to make you look really good.

- Complete chain-of-custody and exception reporting down to the item level

- Advanced features for managing your stubs to ensure your end-users experience zero disruption

- Unique approach to handling journal archives your legal and compliance teams will appreciate

- Many destination options including Office 365 and dozens of other cloud-based and on-premises archives
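Item-level chain-of-custody and exception reporting boils down to proving that every item extracted from the source archive arrived intact at the destination. The sketch below is a minimal, hypothetical illustration of that idea, not Archive360’s implementation: it assumes you recorded an item ID and a SHA-256 hash of each item’s content at extraction time and again at ingestion, and it sorts every item into verified, missing, altered, or unexpected buckets. The names `reconcile`, `sha256_hex`, and `CustodyReport` are invented for this example.

```python
import hashlib
from dataclasses import dataclass, field


@dataclass
class CustodyReport:
    """Hypothetical item-level reconciliation result (illustrative only)."""
    verified: list[str] = field(default_factory=list)    # hash at target matches source
    missing: list[str] = field(default_factory=list)     # extracted but never ingested
    altered: list[str] = field(default_factory=list)     # ingested, but content hash differs
    unexpected: list[str] = field(default_factory=list)  # ingested with no source record


def sha256_hex(data: bytes) -> str:
    """Return the hex SHA-256 digest of an item's raw content."""
    return hashlib.sha256(data).hexdigest()


def reconcile(source: dict[str, str], target: dict[str, str]) -> CustodyReport:
    """Compare item_id -> content-hash maps captured at extraction and at ingestion.

    Assumes both sides use the same item IDs and hash the same bytes
    (e.g. the raw message including attachments).
    """
    report = CustodyReport()
    for item_id, src_hash in sorted(source.items()):
        if item_id not in target:
            report.missing.append(item_id)
        elif target[item_id] != src_hash:
            report.altered.append(item_id)
        else:
            report.verified.append(item_id)
    # Anything at the destination with no matching source record is an exception too.
    report.unexpected = sorted(set(target) - set(source))
    return report


if __name__ == "__main__":
    src = {
        "msg-001": sha256_hex(b"Quarterly results"),
        "msg-002": sha256_hex(b"Board minutes"),
        "msg-003": sha256_hex(b"Hold notice"),
    }
    dst = {
        "msg-001": sha256_hex(b"Quarterly results"),
        "msg-002": sha256_hex(b"Board minutes (edited)"),
    }
    r = reconcile(src, dst)
    print(r.verified, r.missing, r.altered, r.unexpected)
    # prints: ['msg-001'] ['msg-003'] ['msg-002'] []
```

Every item lands in exactly one bucket, so a report with empty `missing`, `altered`, and `unexpected` lists is one way to support a claim that 100% of the archive was accounted for.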

Open Archiving

We interviewed 1,100 customers to learn what they loved and hated about their legacy archive and used that insight to create a completely new kind of archive platform.

- More secure: If you don't control your encryption keys, you don't control your data. With our archive, only you have access to your encryption keys.

- More private: Privacy laws can require you to retain data in the region where it was created. SaaS archives don't do that. Our archive automatically stores employee and customer data in the country of origin.

- More insight: Your archive holds incredible insight that until now has been trapped in a black box. Our archive empowers you to extract intelligence from your emails, files and videos for the first time.

Considering Journals and Journal Migrations

Data repositories are growing larger, more complex, and more wide-ranging by the day for every organization. This is coupled with an increasingly stringent regulatory and legal climate, with new, stricter regulatory retention requirements being added every year.

Chances are your organization must comply with the financial services industry’s very prescriptive SEC 17a-4, FINRA, and MiFID II requirements, needs to maintain ongoing litigation holds on specific custodians’ data, or wants to conduct targeted internal investigations.

The need to capture, immutably store, index (make searchable), audit, and export content (with all metadata intact) has radically expanded beyond everyday email. Your compliance journals may need to include content from collaboration platforms like Bloomberg Chat, Slack, Salesforce, Microsoft Teams, social media platforms, and many other sources.

Migrating a legacy Enterprise Vault journal in a legally defensible manner is not easy and should only be done by those with a long history of experience and success. Some migration vendors will tell you that “exploding” or “splitting” journals so that they can be inserted into Office 365 is the best process. Microsoft frowns on this practice and instead suggests that the legacy journals and ongoing journal streams from Office 365 be migrated into another third-party cloud archive. Some companies have chosen to migrate their journals into a SaaS cloud infrastructure but with the issues already highlighted with SaaS clouds, the better choice is to move them into your own, company-controlled cloud tenancy.

Your Migration Questions Answered

Archive360 understands how big a decision it is to move to the hyperscale cloud and appreciates the many concerns you might have. Having carried out over a thousand successful legacy archive migrations, we’re well aware of the questions you need answers to and are able to dispel your fears in our initial discussions. The conversations we have at the start of a migration normally include answering questions like…

- How much data do I actually have in my archive and what kind of data is it (formats)?

- Is there a cost to move my data out of my legacy archive? If so, what is it?

- Does the archived data need to be reconverted back to its original format?

- How much “dark data” do I have (such as archived messages from inactive users and leavers)?

- Do I need to migrate the entire archive and/or just the journal archive?

- I have ongoing or pending litigation; can I still migrate my archived mail?

- How do I manage archived email that is under legal hold? What special handling is needed to maintain chain of custody?

- Will I be able to account for 100% of my archived mail?

- How long will the migration take?

- Will the migration affect my end users’ productivity?

- Does the legacy EV archive include stubs or pointers to email in the live email system? Stubs that aren’t migrated correctly can cause serious legal and regulatory issues.


Archive360 Email Migration Software Product Highlights

Archive360 offers the most trusted archive and legacy data migration solution available, specifically designed for the Enterprise Vault archive. Fully integrated with Enterprise Vault’s APIs for faster, more accurate data extraction, Archive360 extracts messages and attachments, including all metadata, directly from the archive, and maintains an item-level audit trail for compliance and legal reporting (chain of custody).

Archive360 Enterprise Vault migration detail:

- Utilizes a multi-threaded, multi-server architecture: provides the highest performance and accuracy of all migration solutions

- Uses native Enterprise Vault web APIs: enables search and filtering of emails by custodian, date range, and other criteria

- Does not require indexing or data gathering before extraction: begin message extraction within minutes of installation, unlike other solutions that take days or weeks to index before the project starts

- Message-level chain-of-custody reporting: legally defensible reporting reduces eDiscovery risk

- Intuitive and powerful graphical user interface: less time needed for training and faster time to migration

The Cloud Archive Organizations Trust

Archive360 provides the cloud archive and information management platform trusted most by enterprises and government agencies worldwide, purpose-built to run in the hyperscale cloud. Installed and run from your organization’s individual public cloud tenancy, you retain all the power, flexibility, and management capability while maintaining complete control of your data and its security, including encryption keys that only you have access to. Additionally, unlike on-premises and SaaS archiving solutions, you are free to unlock valuable insights via data analytics and carry out powerful searches on your data using the latest cloud-based tools that will benefit multiple teams across your business, from HR to legal and compliance.

Archive Migration Connectors

Archive360 has successfully helped more than 2,000 customers extract data from 20+ enterprise archives, legacy applications, and file system repositories, including the following:


- ArchiveOne C2C

- Autonomy EAS

- Autonomy NearPoint

- Autonomy Consolidated Archive

- EMC EmailXtender

- EMC SourceOne

- Gwava Retain

- Dell EMS MessageOne

- Dell Quest Archive Manager

- HPCA

- Opentext AXS-One (Exchange and IBM Notes)

- Opentext Email Archive (Exchange and IBM Notes)

- Opentext IXOS (Exchange and IBM Notes)

- Commvault Simpana

- Zovy Archive

- Metalogix

- PSTs

- IBM NSFs
